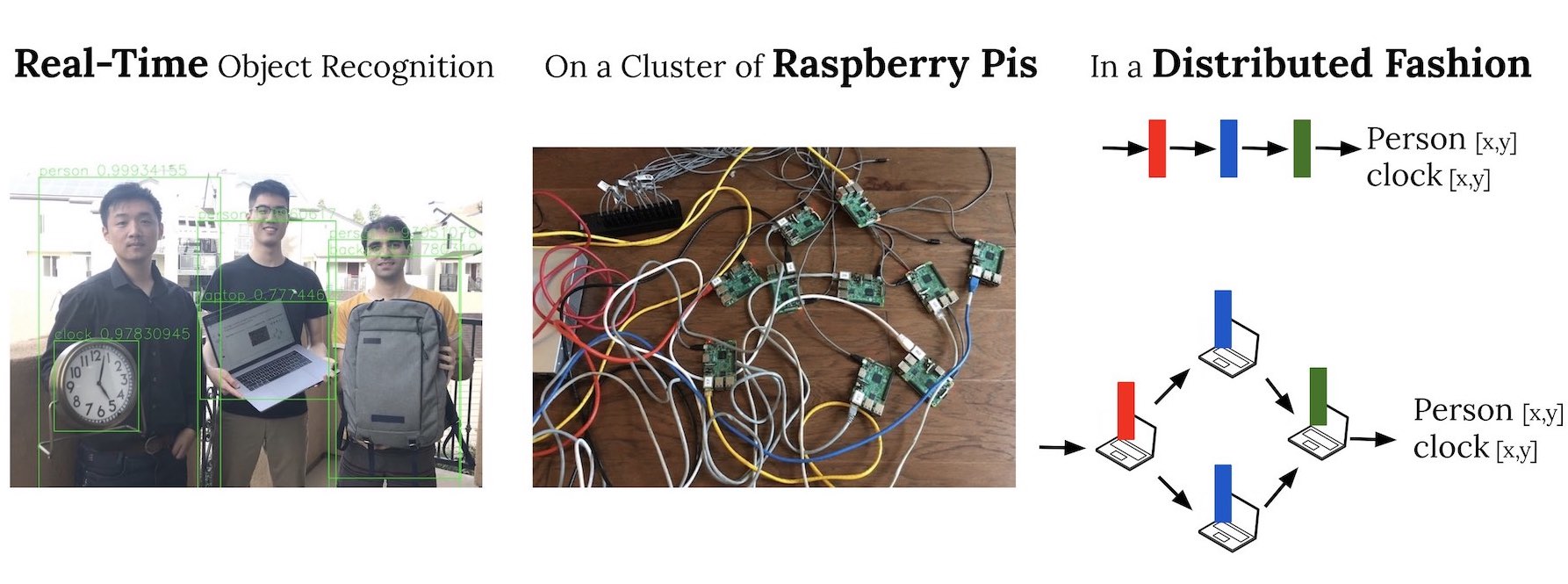

An Edge-Centric Scalable Intelligent Framework To Collaboratively Execute DNN

Jiashen Cao, Fei Wu, Ramyad Hadidi, Lixing Liu, Tushar Krishna, Micheal S. Ryoo, Hyesoon Kim

HPArch Lab

Motivation

The widespread adaptation of deep neural network (DNN) to solve new problems enables us to tackle several ordinary tasks. Since DNN execution is inherently compute intensive and resource hungry, it requires a high-performance computing system or a cloud-based provider. These approaches lead to an increased risk of losing privacy as well as a strong dependency on the network connectivity. On the other hand, the ever-increasing number of Internet of Things (IoT) and edge devices being integrated in our daily lives creates a great platform for DNN execution. However, the demanded computational power from resource-hungry DNN-based applications and manufacturing costs of IoT devices, limit the integration of DNNs in these devices.

Introduction

Our studies propose to distribute the entire DNN model onto multiple resource-constraint devices so that we can reduce computation pressure and memory usage. Each device handles a certain task or, in other words, is in charge of a portion/entire layer of a DNN model.

Our Framework

Hardware

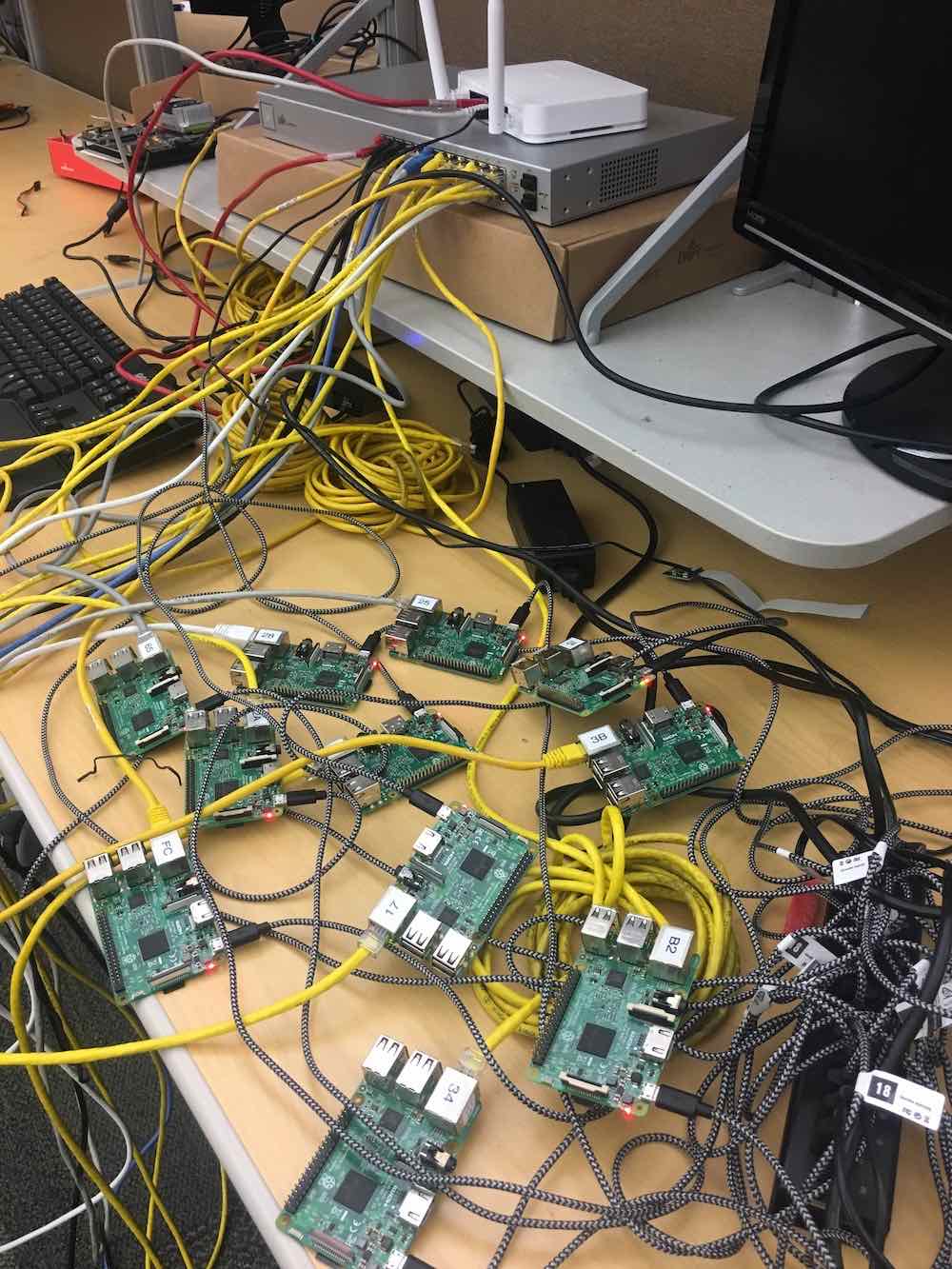

We use up to 9 Raspberry Pis in our system to compute deep learning computation.

Software

To ease the trouble of managing many devices, we use Docker and Kubernetes to deploy and manage different services. However, this framework works only for lightweight models. Heavy models like YOLO are not able to fit into Docker container. In those cases, services are still running on bare bone devices and virtualization are only used for intermediate nodes.

Results

1 Raspberry Pi

We use single Raspberry Pi 3 as our baseline. On single Raspberry Pi, it takes around 15 seconds to process 1 image. Because single Raspberry Pi is not pipelined, it is not able to hide the latency for each image.

8 Raspberry Pi

By using the technique we proposed, the system is able to achieve a better throughput rate of about 0.5 frames per second. Because our proposed system is pipelined, it could hide the image processing latency to some extent. For more information, please check the references, or check our publications for an updated list.